Follow the steps below to configure a Declarative Pipeline (pipeline as code) using a Jenkinsfile.

Prerequisites:

1. Project setup in Bitbucket/GitHub/GitLab

2. Jenkins and Tomcat (web container) set up.

3. Maven installed in Jenkins

4. SonarQube set up and integrated with Jenkins

5. Artifactory configured and integrated with Jenkins

6. Slack channel configured and integrated with Jenkins
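
Before writing the full Jenkinsfile, it can help to confirm that the Maven prerequisite is in place. The following is a minimal sketch, assuming the Maven installation is registered in Jenkins under the name Maven3 (the name the pipeline in this lab uses) and that the agent runs a Unix shell. The stage name 'Check tools' is only illustrative.

```groovy
// Smoke test: fails early if the 'Maven3' tool is not configured in Jenkins.
pipeline {
    agent any

    tools {
        // Must match the Maven installation name configured in Jenkins
        maven 'Maven3'
    }

    stages {
        stage('Check tools') {
            steps {
                // Prints the Maven and Java versions found on the agent
                sh 'mvn -version'
            }
        }
    }
}
```

If this job fails while resolving the tool, fix the Maven installation name in Jenkins before moving on.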

Add a Jenkinsfile (pipeline code) to your MyWebApp project

Step 1

Go to GitHub and open the repository where you set up MyWebApp in Lab exercise #2.

Step 2

Click on create new file.

Step 3

Enter Jenkinsfile as the file name.

Step 4

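Copy and paste the code below, then change the environment-specific values (highlighted in red in the original post) to match your settings, for example the tool and server names, the Tomcat credentialsId and URLs, the Slack channel name, and the approver user IDs.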


    // Shared handles used by the Artifactory stage
    rtMaven = null
    server = null

    pipeline {
        agent any

        tools {
            // Name of the Maven installation configured in Jenkins
            maven 'Maven3'
        }

        stages {
            stage ('Build') {
                steps {
                    sh 'mvn clean install -f MyWebApp/pom.xml'
                }
            }

            stage ('Code Quality') {
                steps {
                    // 'SonarQube' is the name of the SonarQube server configured in Jenkins
                    withSonarQubeEnv('SonarQube') {
                        sh 'mvn -f MyWebApp/pom.xml sonar:sonar'
                    }
                }
            }

            stage ('JaCoCo') {
                steps {
                    // Record the JaCoCo code coverage report
                    jacoco()
                }
            }

            stage ('Artifactory Upload') {
                steps {
                    script {
                        // 'My_Artifactory' is the Artifactory server ID configured in Jenkins
                        server = Artifactory.server('My_Artifactory')
                        rtMaven = Artifactory.newMavenBuild()
                        rtMaven.tool = 'Maven3'
                        rtMaven.deployer releaseRepo: 'libs-release-local', snapshotRepo: 'libs-snapshot-local', server: server
                        rtMaven.resolver releaseRepo: 'libs-release', snapshotRepo: 'libs-snapshot', server: server
                        rtMaven.deployer.deployArtifacts = false // Disable artifacts deployment during Maven run
                        buildInfo = Artifactory.newBuildInfo()
                        rtMaven.run pom: 'MyWebApp/pom.xml', goals: 'install', buildInfo: buildInfo
                        // Deploy the artifacts and publish the build info after the Maven run
                        rtMaven.deployer.deployArtifacts buildInfo
                        server.publishBuildInfo buildInfo
                    }
                }
            }

            stage ('DEV Deploy') {
                steps {
                    echo "deploying to DEV Env "
                    // Replace credentialsId and url with your Tomcat credentials ID and address
                    deploy adapters: [tomcat8(credentialsId: '268c42f6-f2f5-488f-b2aa-f2374d229b2e', path: '', url: 'http://localhost:8090')], contextPath: null, war: '**/*.war'
                }
            }

            stage ('Slack Dev Notification') {
                steps {
                    echo "deployed to DEV Env successfully"
                    slackSend(channel:'your slack channel_name', message: "Job is successful, here is the info - Job '${env.JOB_NAME} [${env.BUILD_NUMBER}]' (${env.BUILD_URL})")
                }
            }

            stage ('QA Approve') {
                steps {
                    echo "Taking approval from QA manager"
                    // Wait up to 7 days for one of the listed users to approve
                    timeout(time: 7, unit: 'DAYS') {
                        input message: 'Do you want to proceed to QA Deploy?', submitter: 'admin,manager_userid'
                    }
                }
            }

            stage ('QA Deploy') {
                steps {
                    echo "deploying to QA Env "
                    // Replace your_dns_name with the host name of your QA Tomcat server
                    deploy adapters: [tomcat8(credentialsId: '268c42f6-f2f5-488f-b2aa-f2374d229b2e', path: '', url: 'http://your_dns_name:8090')], contextPath: null, war: '**/*.war'
                }
            }

            stage ('Slack QA Notification') {
                steps {
                    echo "Deployed to QA Env successfully"
                    slackSend(channel:'your slack channel_name', message: "Job is successful, here is the info - Job '${env.JOB_NAME} [${env.BUILD_NUMBER}]' (${env.BUILD_URL})")
                }
            }
        }
    }
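
The values that Step 4 asks you to change are scattered across the stages above. One possible way to keep them together is a declarative environment block. The sketch below is not part of the original lab: the variable names TOMCAT_CREDS_ID, DEV_TOMCAT_URL and SLACK_CHANNEL are hypothetical, and their values are the placeholders from the listing. It also adds a post section so that Slack is told about failed runs, which the stage-based notifications do not cover because they only run when every earlier stage succeeds.

```groovy
// Sketch only: variable names are hypothetical, values are placeholders to replace.
pipeline {
    agent any

    environment {
        TOMCAT_CREDS_ID = '268c42f6-f2f5-488f-b2aa-f2374d229b2e'
        DEV_TOMCAT_URL  = 'http://localhost:8090'
        SLACK_CHANNEL   = 'your slack channel_name'
    }

    stages {
        stage('DEV Deploy') {
            steps {
                echo 'deploying to DEV Env'
                // Same deploy step as in the lab, reading its settings from the environment block
                deploy(
                    adapters: [tomcat8(credentialsId: env.TOMCAT_CREDS_ID, path: '', url: env.DEV_TOMCAT_URL)],
                    contextPath: null,
                    war: '**/*.war'
                )
            }
        }
    }

    post {
        // Runs only when the build result is a failure
        failure {
            slackSend(
                channel: env.SLACK_CHANNEL,
                color: 'danger',
                message: "Job failed - Job '${env.JOB_NAME} [${env.BUILD_NUMBER}]' (${env.BUILD_URL})"
            )
        }
    }
}
```

With this layout, moving the pipeline to another environment means editing only the environment block rather than every stage.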

Step 5

Click on commit to save the file into GitHub.

That's it. The pipeline as code (Jenkinsfile) is now set up in GitHub.

Create and run the pipeline from the Jenkinsfile

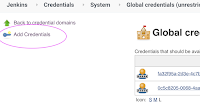

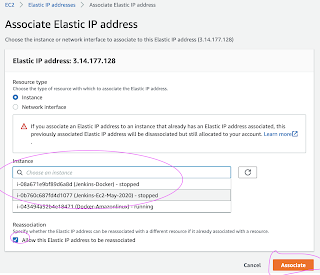

1. Log in to Jenkins

2. Click on New Item, enter a name, choose Pipeline, and click OK

3. Under Build Triggers, choose Poll SCM.

Enter H/2 * * * * as the schedule (polls the repository roughly every two minutes)

4. In the Pipeline section, choose Pipeline script from SCM as the definition

5. Under SCM, choose Git

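6. Enter the HTTPS URL of the repo and choose credentials: your GitHub user name with a password or personal access token (GitHub no longer accepts account passwords for Git over HTTPS).

Set Script Path to Jenkinsfile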

7. Click on Apply and Save

8. Click on Build now.

You should see the pipeline run and the application deployed to Tomcat.
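
The Poll SCM schedule from step 3 can also be kept in the Jenkinsfile itself, so the trigger is versioned with the code. The following is a minimal sketch using the declarative triggers directive; only the Build stage from the lab is shown, and the schedule is the every-two-minutes polling interval. Jenkins typically registers the trigger after the job has run once with this Jenkinsfile.

```groovy
pipeline {
    agent any

    triggers {
        // Poll the repository about every two minutes; H spreads the load across jobs
        pollSCM('H/2 * * * *')
    }

    tools {
        maven 'Maven3'
    }

    stages {
        stage('Build') {
            steps {
                sh 'mvn clean install -f MyWebApp/pom.xml'
            }
        }
    }
}
```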